This is a longer think piece expanding on the quick post I made on Mastodon the other day.

Every time someone floats the idea that Apple should acquire Perplexity to “supercharge” its AI efforts, I get whiplash, not just from the sheer strategic laziness of the suggestion, but from the deeper cultural misalignment it completely ignores. The very idea is a perplexing thought.

Perplexity isn’t some misunderstood innovator quietly building the future. It’s a company fundamentally unsure of what it is, what it stands for, or how to exist without parasitizing the open web. It’s been posing as a search engine, an AI-powered Q&A tool, a research assistant, and lately, some vague hybrid of all three, depending on who’s asking and what narrative sounds hottest that week. The only throughline is this: a constant need to justify its own existence, retrofitting its product pitch to whatever the industry is currently foaming at the mouth about.

And then there’s the CEO.

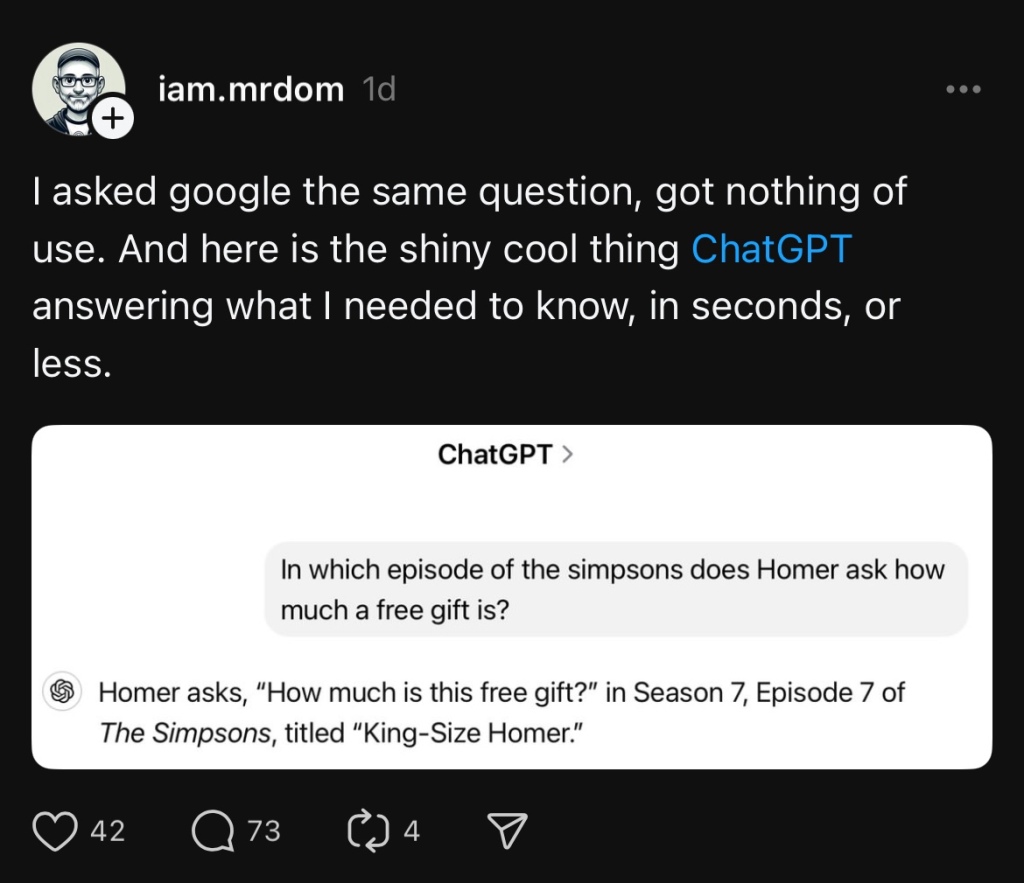

Perplexity CEO Aravind Srinivas has made a habit of saying the quiet parts out loud, and not in a refreshing, brutally honest way, but in a way that suggests he hasn’t thought them through. Case in point: TechCrunch Disrupt 2024, where he was asked point-blank to define plagiarism and couldn’t answer. Not didn’t answer. Couldn’t. That wasn’t just a missed PR opportunity. That was a red flag, flapping violently in the face of a company that scrapes content from other publishers, slaps a “summarized by AI” badge on it, and tries to call that innovation.

When you can’t define plagiarism as the CEO of a company built on other people’s work, that’s not strategic ambiguity, that’s an ethical void. And it’s telling. Perplexity has made a business of riding the razor-thin line between fair use and flat-out theft, and they want the benefit of the doubt without the burden of responsibility.

Which is where the Apple comparisons get absurd.

Yes, Apple stumbled. For more than a decade, Siri was a rudderless ship, a clunky commuter train in an age where everyone else was racing to build maglevs. The company completely missed the LLM Shinkansen as it rocketed past, leaving Siri coughing in the dust. What followed was a scramble, an engine swap mid-ride, and the painful attempt to retrofit a creaky voice assistant into something worthy of generative AI expectations.

That failure — public, prolonged, and still unresolved — gave the impression that Apple had no idea what was coming. That they were too slow, too self-contained, and too arrogant to evolve. And to some extent, that criticism landed. The year-long silence after ChatGPT’s breakout moment painted Apple as unprepared, reactive, even out of touch.

But here’s the thing: while Apple still hasn’t shown much of anything tangible since the Apple Intelligence announcement at WWDC 2024 (Genmoji? Really? Messed-up email and notification summaries?), the signals are clear. The company has changed course. They’ve acknowledged they’re behind, and now they’re moving, quietly but with force. Once Apple has its engineering machine locked onto a target, the company doesn’t need to acquire noisy, erratic startups to plug the gaps. What it needs is time. And direction. And both are now in motion.

Which brings us back to Perplexity. Apple doesn’t need it. Not for the tech — which is just a UX layer on top of open models and scraped data. Not for the team — which seems more interested in testing the boundaries of IP law than building products people trust. And definitely not for the culture — which is allergic to accountability and powered by vibes over values.

Apple’s entire value proposition is control: of the user experience, of the ecosystem, and of the narrative. Perplexity brings chaos. Unapologetically so. It doesn’t have a sustainable moat, a mature product, or a north star. It has hype. It has press. And it has the moral compass of a company that thinks citation is a permission slip to republish everyone else’s work for free.

If Apple wants a better search experience, it can build one, with privacy built in, on-device processing, and full-stack integration. If it wants a smarter assistant, it can leverage its silicon and software in ways that Perplexity simply can’t touch. What it doesn’t need is a cultural virus from a startup that treats copyright like a rounding error and ethics like an optional plugin.

So no, Apple shouldn’t buy Perplexity. Not because it can’t. But because it finally knows what it needs to build, and it’s building it the Apple way. At least that’s what I think they’re doing.