Between fast and correct, ChatGPT will always choose fast, because it's not programmed or trained to say it doesn't know the answer. ChatGPT is trained to respond confidently, and as a result it will give you an answer regardless of whether that answer is false or factual.

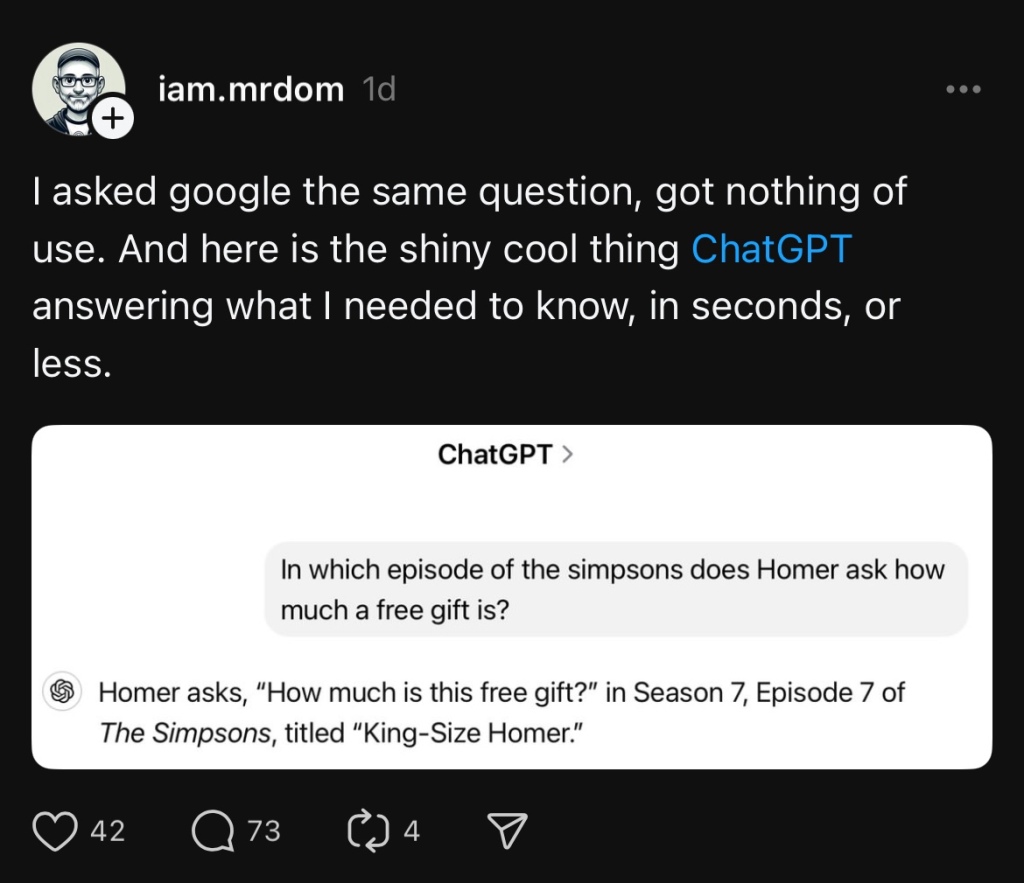

In this example, ChatGPT was asked in which episode of The Simpsons Homer Simpson asks, "How much is a free gift?" ChatGPT confidently answered season 7, episode 7, "King-Size Homer."

Season 7, episode 7 is indeed "King-Size Homer," but it's not the episode in which Homer asks the question. The correct episode is "The Joy of Sect," season 9, episode 13, as confirmed by IMDb.

Since a chatbot built on a large language model (LLM) would be useful for IMDb to have, maybe it's only a matter of time before they build their own. After all, search seems to be giving way to chatbots and voice queries lately. There is, however, a caveat, which I will get to in a second.

If you enter "Homer how much free gift" into YouTube's search bar, this video comes up as the first result. Granted, the episode title, number, and season appear only in the video description, which could easily be wrong or even blank, but cross-referencing it with IMDb confirmed it's the correct episode.

If you search on Google, it may suggest a more accurate version of the quote to search for, which should bring up the same YouTube video at or near the top of the results. Google 1, ChatGPT 0.

ChatGPT may well be the most advanced LLM chatbot today, but it's a language model: it is designed and trained to generate the most plausible, well-formed response based on the material it was trained on. It is not trained to provide the correct answer to queries, because it's not meant to be a search engine or a database that you submit queries to.

While ChatGPT does not verify or fact-check its responses, other models may be built to look up information online and infer the correct answer from what they find.

If ChatGPT does give the correct answer, it's only because the answer, along with the relevant context, is contained in its training material and it manages to pull it out. In other words, correct answers arrive by chance, without verification. How many times have you realized it was lying to you and had to ask why it gave you the wrong answer, or had to double-check whether the information it gave you was correct?

Remember last year when everyone was reporting that ChatGPT (GPT-4, specifically) had passed the bar exam, the exam lawyers must pass to practice, in the top 10% of test-takers? It actually didn't. It was closer to the 69th to 48th percentile, depending on which group of test-takers it was compared against. The method behind the original figure was flawed.

Again, ChatGPT is not an information database, but it does know how to form correct sentences, write in particular styles, translate text, and help you with your code. Maybe one day it will become an information database, but today, with GPT-3.5, GPT-4, and GPT-4o, is not that day.

Use ChatGPT as a writing or coding assistant and you're probably golden, but you still have to play the role of editor to make sure its output is correct for your needs.